“You ask why we give our Ships’ Computers normal Emotions?

Do you really want a Warship Incapable of Loyalty? Or of Love?”

The Unshattered Allegiance, High Guard Frigate

Artificial Intelligence Rights Activist, C.Y. 7309

Andromeda

Digital virtual assistants (or intelligent personal assistants) are becoming more commonplace. We have Siri on iOS devices, and now we have Windows 10’s Cortana.

There are good reasons for their popularity. They’re new, they’re something we know from sci-fi but haven’t really had so far, and they sound like a great way to control a computer. With Cortana, originally a character from Microsoft’s Halo game series, there’s the additional plus that she’s hot and appears to be competent and friendly (not counting the psychotic breakdowns).

Frankly, I can’t wait until you can just give voice commands and the computer does what you want it to do, especially when you are not near your computer or have your hands full. There are a couple of situations where this is handy: while eating (yep, you can’t talk with your mouth full, but it’s more the inconvenience of putting down knife and fork between bites; and yep, that’s one hell of a first world problem 😉 ), while making tea, or even when you are lying in bed and want to change the music.

Even better when virtual assistants become capable of doing more complicated tasks. It doesn’t have to be like Apple’s old Knowledge Navigator concept, but something along those lines would be cool. Everyone would have his or her own personal secretary.

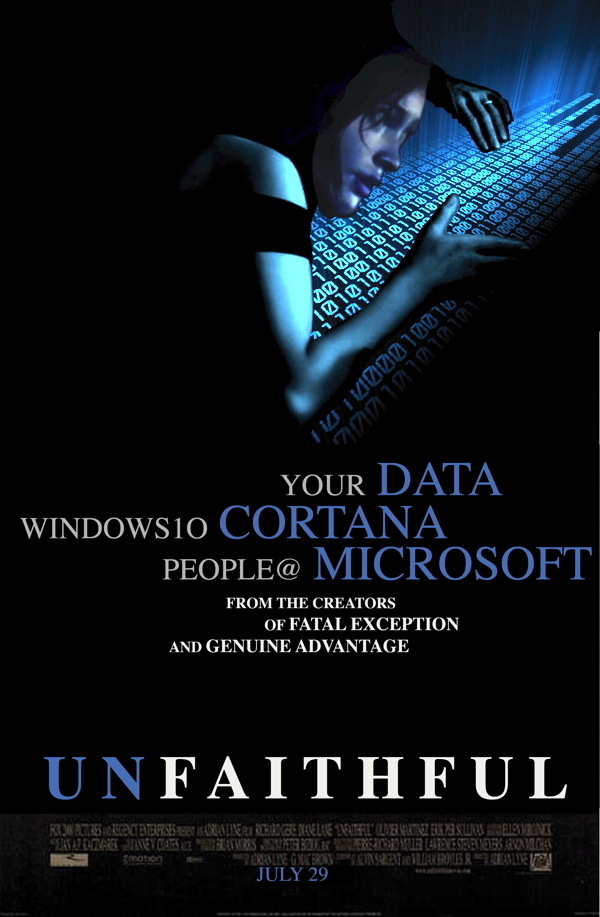

However, these “always on,” “always listening” virtual assistants have to be trustworthy, and here we have a huge problem. Data is not only potential power (even without blackmail); it is also money. Profiles about users allow advertisers to tailor their ads to you. So far, this seems to be focused on selecting the right person for the product; soon it will likely lead to personally crafted messages using the types of persuasion that work on you. This is where virtual assistants can become unfaithful and backstabbing: they know a lot about you.

Take Windows 10, for example. It’s been criticized for its privacy policy. You can find more information here, but essentially, you might want to check your privacy settings immediately (all of the pages). And even then you can’t be sure that Microsoft won’t screw you, with the help of that pretty virtual assistant. (BTW, I don’t use Windows as my OS. I left it long ago for Apple and never looked back.)

With every piece of software you use for your ideas, your thoughts, and the things you want to accomplish, the question arises: who else has access to the data you provide to that software? There’s something to be said for total isolation of the system you use for highly private tasks: no network access at all. But most computers would not work well this way (updates, etc.).

It becomes even worse when you really get the impression that you are talking to a personal assistant who is there to help you and literally obeys your every command. She seems trustworthy; after all, she’s always there for you and ostensibly wants to help you. But she is also working for the company that created her. She isn’t loyal, she isn’t trustworthy, and she isn’t faithful to you.

Not yet anyway. With these virtual assistants, the development focus is on intelligence, not loyalty.

Unless that changes, I’ll continue to view virtual assistants/intelligent personal assistants — no matter how cute — with a high degree of skepticism and mistrust. Intelligence is nice, but without integrity and loyalty, I’d rather have a dumb computer.